Long-running Custom Code with Azure Data Factory using Durable Functions

I've written a new version of this article for Microsoft Fabric, but it works the same way on Azure Data Factory and Synapse Analytics. Feel free to check it out!

Azure Data Factory offers many out-of-the-box activities, but one thing it lacks is an easy way to run custom code. The emphasis is on easy: custom code is supported only through Azure Batch, which is a pain to set up, let alone manage. The other option is Azure Functions, but Microsoft's documentation says the call has only 230 seconds to finish; otherwise ADF times out and the pipeline fails.

So, how can we run a long job (anything over 230 seconds) on ADF, say extracting the contents of a tar file (their example with normal Azure Functions is here)? The solution is to run it as a background job with Durable Functions.

Durable Functions run on the same platform as normal Azure Functions, but they run asynchronously. When you trigger a Durable Function, it starts a background process and gives you a few URLs for interacting with that process, including one to query its status. When you call that status URL, it returns the status of the background job, along with its output if it has finished.

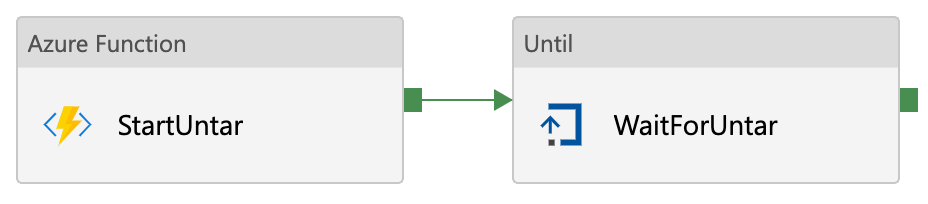
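To make the status check concrete, here is a minimal Python sketch of how a client might read the JSON that the status URL returns. The field names (`runtimeStatus`, `output`, and so on) follow the Durable Functions status payload; every value in the sample, including the orchestrator name and the URLs, is made up for illustration, and the two helper functions are hypothetical.

```python
import json
from typing import Any, Dict, Optional

# Illustrative status payload. All values are placeholders, not real output.
SAMPLE_STATUS: Dict[str, Any] = json.loads("""
{
  "name": "UntarOrchestrator",
  "instanceId": "abc123",
  "runtimeStatus": "Completed",
  "input": {"tarUrl": "https://example.invalid/in/archive.tar"},
  "customStatus": null,
  "output": ["https://example.invalid/out/a.csv",
             "https://example.invalid/out/b.csv"],
  "createdTime": "2020-01-01T10:00:00Z",
  "lastUpdatedTime": "2020-01-01T10:05:00Z"
}
""")


def is_in_progress(status: Dict[str, Any]) -> bool:
    """True while the background job has not reached a final state."""
    return status.get("runtimeStatus") in ("Pending", "Running")


def output_if_completed(status: Dict[str, Any]) -> Optional[Any]:
    """Return the job's output only when it completed successfully."""
    if status.get("runtimeStatus") == "Completed":
        return status.get("output")
    return None
```

A caller would keep polling while `is_in_progress` is true and read `output_if_completed` once it is not.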

The idea is to trigger a Durable Function through an HTTP endpoint, wait for it to finish, and then get the result. Here's what it looks like:

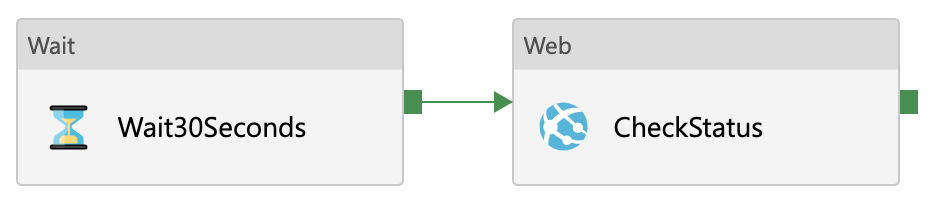

And here’s how it looks inside the Until activity:

Here’s the process:

- First, we trigger our Durable Function through an HTTP trigger using the Azure Function activity.

- Then, with the Until activity, we check the status of that function.

- The Wait activity pauses for around 30 seconds (or longer or shorter, up to you) to give the function time to run.

- The Web activity makes a request to the statusQueryGetUri that the Azure Function activity returns, by calling

`@activity('StartUntar').output.statusQueryGetUri`

- The Until activity checks the result of the CheckStatus Web activity with the expression

`@not(or(equals(activity('CheckStatus').output.runtimeStatus, 'Pending'), equals(activity('CheckStatus').output.runtimeStatus, 'Running')))`

- It repeats until the function has finished or failed, or until it times out (set on the Timeout property).
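For readers who think in code rather than pipeline activities, the steps above can be sketched as a plain Python polling loop. This is only an analogy under stated assumptions, not how ADF implements the Until activity: `wait_for_completion`, `fetch_status`, and the parameter names are hypothetical, and the timeout is approximated by summing the wait intervals.

```python
import json
import time
import urllib.request
from typing import Any, Callable, Dict


def fetch_status(url: str) -> Dict[str, Any]:
    """GET the status URL and decode its JSON body (the CheckStatus step)."""
    with urllib.request.urlopen(url) as resp:
        return json.load(resp)


def wait_for_completion(
    status_url: str,
    fetch: Callable[[str], Dict[str, Any]] = fetch_status,
    sleep: Callable[[float], None] = time.sleep,
    interval_s: float = 30,
    timeout_s: float = 3600,
) -> Dict[str, Any]:
    """Poll until runtimeStatus is neither 'Pending' nor 'Running'."""
    waited = 0.0
    while True:
        sleep(interval_s)            # Wait activity
        status = fetch(status_url)   # Web activity against statusQueryGetUri
        waited += interval_s
        # Same condition as the Until expression in the pipeline.
        if status.get("runtimeStatus") not in ("Pending", "Running"):
            return status
        # Rough equivalent of the Until activity's Timeout property.
        if waited >= timeout_s:
            raise TimeoutError(f"job still running after {waited:.0f}s")
```

The `fetch` and `sleep` parameters are injectable so that the loop can be exercised without a real function app or real waiting.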

In this scenario, the Durable Function untars the file, stores the contents in a storage account, and returns the storage URLs in the HTTP response body. The next step might be to copy those files into a Data Lake using a ForEach with a Copy activity, or whatever the process requires.
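As a rough illustration of the untar step itself, the sketch below extracts every regular file from a tar archive in memory and hands it to a storage callback that returns a URL. It is not the code from the original function: `untar_and_store` and the `store` callback are hypothetical, and the upload to a storage account is deliberately left to the caller.

```python
import io
import tarfile
from typing import Callable, List


def untar_and_store(tar_bytes: bytes, store: Callable[[str, bytes], str]) -> List[str]:
    """Extract each regular file and return the URLs reported by `store`.

    `store(name, data)` is a caller-supplied function (an assumption here)
    that saves the bytes somewhere and returns the resulting URL.
    """
    urls: List[str] = []
    # "r:*" lets tarfile detect plain, gzip, bz2 or xz archives automatically.
    with tarfile.open(fileobj=io.BytesIO(tar_bytes), mode="r:*") as archive:
        for member in archive:
            if not member.isfile():
                continue  # skip directories, links and other special entries
            extracted = archive.extractfile(member)
            if extracted is None:
                continue
            urls.append(store(member.name, extracted.read()))
    return urls
```

The returned list is the kind of value an orchestration could hand back as its output, which the pipeline could then feed into a ForEach.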

Although this example untars a file, you can apply the same logic whenever you need to run a custom background job on Azure Data Factory, such as converting an XML file to JSON, because ADF doesn't support XML (this will be another blog post).